Why a Data Quality Framework for the enterprise?

Just as an airport uses strict protocols to ensure the safety and efficiency of its operations, the enterprise requires a robust framework to manage data quality. This framework allows the enterprise to maintain and improve data quality, supporting our ability to innovate, personalize user experiences and continually improve our services.

The Data Quality Framework for the enterprise was designed to address the following issues:

- Low level of Data Quality maturity at enterprise level,
- Poor Data Quality in several areas of the business,
- Negative impacts of poor Data Quality on the business,
- Fragmented approaches to Data Quality across the enterprise, due to the absence of enterprise-level standards.

Problem statement

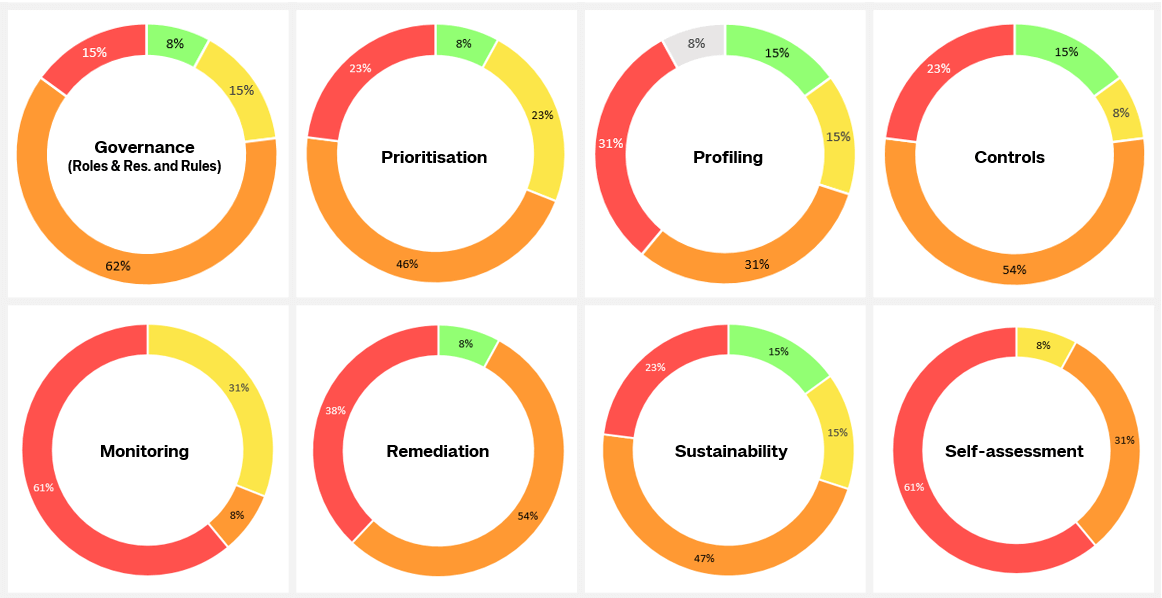

The Data Quality maturity assessment study conducted in Q3 2024 reveals significant gaps in existing Data Quality practices:

Data Quality Maturity Assessment Summary

Key findings include the following:

- Governance: In most cases (close to 77%), respondents report not having:
  - Defined Data Quality roles and associated communication,
  - Standardized Data Quality rules,
  - A standardized way to define the business impact of Data Quality issues.

- Prioritization: 69% of respondents report not having:
  - A common way to prioritize the data to be controlled, based on its relevance to critical business functions.

- Profiling: In 62% of cases, respondents report that:
  - Data in their scope is not profiled to gain a first-level understanding of its quality.

- Controls: Only 23% of respondents report having:
  - Defined Data Quality metrics,
  - Implemented Data Quality checks and controls.

- Monitoring: Only 31% of respondents report having:
  - A normalized way of reporting Data Quality incidents,
  - Routine Data Quality reports that are accessible to key users,
  - Data Quality thresholds defined in a consistent and common way,
  - Metrics to track Data Quality progress and drive remediation efforts.

- Remediation: In the vast majority of cases (close to 92%), respondents report not having:
  - Normalized remediation plans that address the most pressing Data Quality issues,
  - Standard Data Quality remediation workflows, with exception handling and escalation procedures,
  - Established timelines for ongoing Data Quality monitoring and remediation actions.

- Sustainability: Only 30% of respondents report having:
  - A structured approach to determine the root cause of Data Quality incidents,
  - A common approach to identify and implement corrective or preventive measures, in business processes and/or technology, based on root cause analysis.

- Self-assessment: Only 8% of respondents report having:
  - Regular self-assessment procedures,
  - Mechanisms to review practices based on self-assessment results.

The results of the enterprise Data Quality maturity assessment identified the following opportunities for improvement.

- Low level of maturity overall: While some teams within the enterprise have demonstrated a good level of maturity, the Data Quality maturity assessment yielded an average global score of 1.3, between level 1 (Initial: managed in an ad hoc manner) and level 2 (Minimum process discipline is in place).

Teams that have shown pockets of maturity, with rules and practices defined at a local level, include Corporate Finance & Administration - Digital & Transformation Services - Data & Analytics (CFA-DTS-DTA), Hospitality BI & Data Product (HOS-BID), Hospitality Media (HOS-M8D-MED) and Travel unit - Travel Distribution - Cytric (TRU-TRD-CYT).

- The findings identify the following top improvement areas:
  - Monitoring: Most teams lack a structured, systematic monitoring and reporting capability based on Data Quality control points. Furthermore, Data Quality tolerances and thresholds are not defined in a consistent, shared way.
  - Metrics: Metrics to track Data Quality progress and drive remediation efforts are often missing.
  - Profiling: Apart from a few teams that already perform profiling, most teams lack profiling capabilities.
  - Controls: Except in some areas, Data Quality controls are not defined in a way that enables automated, systematic checks throughout the data lifecycle and data pipelines.
  - Issue Remediation: Normalized remediation plans with standard assignment workflows, resolution timelines and escalation mechanisms are missing in most cases.
  - Prioritization: Except for a few areas that have established a list of critical data, data is generally not prioritized based on business criticality.
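To make the profiling, metrics, controls and thresholds gaps more concrete, here is a minimal sketch of an automated check that profiles a dataset, compares each score with a threshold, and flags failures as candidate incidents. It is an illustration under stated assumptions, not the framework's implementation: the two dimensions shown (completeness, uniqueness), the `customer_id` and `email` fields, the threshold values and all function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class CheckResult:
    dimension: str
    column: str
    score: float      # share of records passing the check, 0.0 to 1.0
    threshold: float  # minimum acceptable score (hypothetical value)

    @property
    def passed(self) -> bool:
        return self.score >= self.threshold


def _is_filled(value) -> bool:
    # Treat missing keys, None and empty strings as "not filled".
    return value not in (None, "")


def completeness(records, column):
    """Share of records in which `column` is present and non-empty."""
    if not records:
        return 0.0
    return sum(1 for r in records if _is_filled(r.get(column))) / len(records)


def uniqueness(records, column):
    """Share of non-empty values in `column` that are distinct."""
    values = [r[column] for r in records if _is_filled(r.get(column))]
    if not values:
        return 0.0
    return len(set(values)) / len(values)


# Hypothetical mapping from standard dimension names to profiling metrics.
METRICS = {"completeness": completeness, "uniqueness": uniqueness}


def run_controls(records, rules):
    """Evaluate each (dimension, column, threshold) rule against the records."""
    return [
        CheckResult(dim, col, METRICS[dim](records, col), threshold)
        for dim, col, threshold in rules
    ]


if __name__ == "__main__":
    sample = [
        {"customer_id": "C1", "email": "a@example.com"},
        {"customer_id": "C2", "email": ""},
        {"customer_id": "C2", "email": "b@example.com"},
        {"customer_id": "C3", "email": None},
    ]
    rules = [("completeness", "email", 0.95), ("uniqueness", "customer_id", 1.0)]
    for result in run_controls(sample, rules):
        status = "PASS" if result.passed else "FAIL (raise incident)"
        print(f"{result.dimension}({result.column}) = {result.score:.2f} -> {status}")
```

With the sample data, both rules fail: `email` completeness is 0.50 and `customer_id` uniqueness is 0.75. In practice, such checks would run at defined control points in the pipeline, with failures feeding the incident reporting and remediation workflows described above.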

As a result, monetizing data is challenging, due to the lack of unified, reliable metrics and the absence of transparency mechanisms that make the level of quality visible to consumers.

As illustrated by the figures below, poor data quality has a cost, and the Data Quality Maturity Assessment highlights the need for standards on how to generate, process and leverage high-quality data in the enterprise.

On average, employees spend 29% of their time on non-value-added tasks due to poor data quality and availability, while leading firms reduce this to 5 to 10%. (Source: McKinsey Global Data Transformation Survey, 2019)

Through 2025, at least 30% of GenAI projects will be abandoned after proof of concept due to poor data quality, inadequate risk controls, escalating costs or unclear business value. (Source: Gartner, 2024)

Key Benefits

The primary benefits of implementing a data quality framework include:

- Customer and partner value and satisfaction:
  - Customers receive correct information.
  - Trust is built by ensuring customers know how their data is being handled.
- Direct internal impact on the enterprise:
  - Data revenue generation: enabling the development of new data-based products and services.
  - Reduced operating costs: lower reporting costs, less time spent on non-value-added tasks, and more automation.
  - Enablement of GenAI and other digital transformation use cases.

Success factor for data quality in the enterprise

For an enterprise with several business lines and a low level of maturity, the objective of a data quality framework is to strike a balance between standardization and flexibility:

- Standardization, where common data quality standards can be applied across teams (roles, data quality dimensions, scorecards and metrics).
- Flexibility, preserving the specific policies of each Business Unit, which depend on its regulatory requirements, customer expectations, etc.
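One way to picture this balance is a single enterprise-wide vocabulary of Data Quality dimensions with default thresholds, which each Business Unit may adjust for its own data but not extend with non-standard dimensions. The sketch below is a hypothetical illustration: the dimension list, the threshold values and the function names are assumptions, not values defined by the framework.

```python
from typing import Dict, Optional

# Enterprise-wide standard: shared dimension names and default thresholds
# (hypothetical values, for illustration only).
ENTERPRISE_DEFAULTS: Dict[str, float] = {
    "completeness": 0.95,
    "uniqueness": 0.99,
    "validity": 0.97,
    "timeliness": 0.90,
}


def effective_thresholds(overrides: Optional[Dict[str, float]] = None) -> Dict[str, float]:
    """Merge Business Unit overrides onto the enterprise defaults.

    Overrides may change a threshold value (flexibility) but may not
    introduce dimensions outside the shared vocabulary (standardization).
    """
    overrides = overrides or {}
    unknown = set(overrides) - set(ENTERPRISE_DEFAULTS)
    if unknown:
        raise ValueError(f"Non-standard dimensions: {sorted(unknown)}")
    for dim, value in overrides.items():
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"Threshold for {dim} must be between 0 and 1")
    return {**ENTERPRISE_DEFAULTS, **overrides}


def scorecard(scores: Dict[str, float], thresholds: Dict[str, float]) -> Dict[str, str]:
    """Rate every standard dimension as PASS, FAIL or MISSING (no score reported)."""
    result = {}
    for dim, threshold in thresholds.items():
        if dim not in scores:
            result[dim] = "MISSING"
        else:
            result[dim] = "PASS" if scores[dim] >= threshold else "FAIL"
    return result


if __name__ == "__main__":
    # A Business Unit with stricter completeness requirements (hypothetical).
    bu_thresholds = effective_thresholds({"completeness": 0.99})
    print(scorecard({"completeness": 0.97, "uniqueness": 0.995, "validity": 0.96}, bu_thresholds))
```

Because every scorecard is expressed over the same dimension names, results from different Business Units stay comparable even when their thresholds differ, and a dimension with no reported score is surfaced as `MISSING` rather than silently ignored.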

Key Principles

This data quality framework is based on the following guiding principles, which are aligned with the guiding principles of the enterprise Data Office’s North Star Themes:

Business-driven: Data quality efforts should be proportionate to the importance of the data.

Stakeholder engagement: Parties involved throughout the data quality lifecycle should be able to express their needs.

Process approach: Data quality should be managed across the data lifecycle, from creation to disposal, and as data moves between systems. The Data Quality Framework applies to various categories of data, including customer-facing and internal data, and to several use cases, including Enterprise Operations (Sales, Finance, etc.), Data Integration, Data Migration, MDM, Analytics, Data Science (algorithm and model training), Data Warehouses and Data Lakes, and Operational Databases.

Data observability: The data quality framework integrates data observability and data quality. Data quality ensures data accuracy and reliability, while data observability provides real-time monitoring and understanding of data pipelines and workflows to detect issues and ensure data health.

Standardization of common metrics: A unified approach to metrics should be shared across teams so that Data Quality is measured in a consistent and measurable way.

Transparency and visibility: By defining and enforcing key Data Quality capabilities, the framework also provides a common way of communicating the state of Data Quality across multiple dimensions.
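The data observability principle can be illustrated with two typical pipeline health signals, freshness and volume, which are computed from run metadata rather than from record contents. The sketch below is a hypothetical illustration: the `PipelineRun` structure, the two-hour freshness limit and the 50% volume-drop tolerance are assumptions chosen for the example, not values set by the framework.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from statistics import mean


@dataclass
class PipelineRun:
    finished_at: datetime  # when the load completed (timezone-aware)
    row_count: int         # number of rows written by the run


def observability_alerts(runs, now, max_age=timedelta(hours=2), max_drop=0.5):
    """Return alert messages for stale data or an abnormal drop in volume.

    `runs` is ordered oldest to newest; the latest run is compared with the
    mean row count of the earlier runs (a simple, hypothetical baseline).
    """
    if not runs:
        return ["no pipeline runs recorded"]
    alerts = []
    latest = runs[-1]

    # Freshness: how long ago did the most recent load finish?
    age = now - latest.finished_at
    if age > max_age:
        alerts.append(f"freshness: last load {age} ago exceeds {max_age}")

    # Volume: did the latest load write far fewer rows than usual?
    history = [r.row_count for r in runs[:-1]]
    if history:
        baseline = mean(history)
        if baseline > 0 and latest.row_count < (1 - max_drop) * baseline:
            alerts.append(f"volume: {latest.row_count} rows vs baseline {baseline:.0f}")
    return alerts


if __name__ == "__main__":
    now = datetime(2024, 10, 1, 12, 0, tzinfo=timezone.utc)
    runs = [
        PipelineRun(now - timedelta(hours=26), 1000),
        PipelineRun(now - timedelta(hours=3), 300),
    ]
    for alert in observability_alerts(runs, now):
        print(alert)
```

These signals complement, rather than replace, content-level Data Quality checks: a load can be on time and of normal size yet still contain inaccurate records, and vice versa.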

Poor Data Quality in several areas: poor Data Quality is currently observed in multiple businesses across the enterprise.

Some of the reasons for this situation include:

- Limited capabilities and resources,
- Lack of ownership and collaboration,
- Inconsistencies resulting from data silos,
- Growing regulatory requirements.

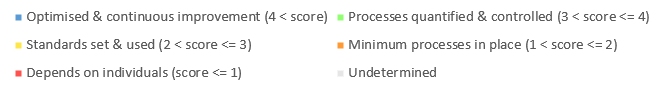

According to the State of Data Quality Report (1), published in 2022, respondents associate poor Data Quality with the following symptoms:

Symptoms of poor Data Quality (1)

Poor Data Quality can have various negative consequences for the business and its teams. Some examples include:

Inaccurate Decision-Making: If decision-makers rely on data that is inaccurate or incomplete, it can lead to poor strategic decisions. For example, a marketing campaign based on faulty customer data may target the wrong audience or offer irrelevant products, resulting in wasted resources and decreased ROI.

Impact on Customer Trust: Inaccurate or inconsistent data can erode customer trust. For instance, sending promotional offers to the wrong addresses due to incorrect contact information can annoy customers and damage the company’s reputation.

Compliance Issues: Regulatory compliance may be compromised if data used for reporting or audits is inaccurate or incomplete. This can result in fines, legal penalties, or reputational damage for the organization.

Operational Inefficiencies: Poor Data Quality can lead to inefficiencies in business operations. For example, inaccurate customer records can result in delays and errors in order processing or customer service.

Missed Opportunities: Inaccurate or incomplete data may cause organizations to miss out on valuable opportunities. For instance, inaccurate market analysis may lead to missed trends or opportunities for innovation, while incorrect customer data may prevent targeted cross-selling or upselling efforts.

Increased Costs: Correcting errors caused by poor Data Quality can be time-consuming and costly. For example, cleaning up inaccurate data, reconciling inconsistencies, and reprocessing incorrect transactions can require significant resources and may result in additional operational expenses.

Objectives of the Data Quality Framework

The Data Quality Framework has been designed to:

- Set guidelines and standards,
- Define roles and responsibilities for ensuring Data Quality across the enterprise's business lines,
- Define and share a common language,
- Set unified requirements and measurement mechanisms,
- Avoid fragmented approaches that generate repetitive tasks and misalignment,
- Enforce best practices,
- Provide tools and templates that abstract away repetitive tasks and common functionalities.

Benefits of the Data Quality Framework

Documenting a Data Quality framework is critical for the enterprise, which relies heavily on the accuracy, availability, and reliability of data in its operations. The following reasons explain why such a framework is essential, especially given the current, suboptimal assessment results:

Continuous Improvement: Documentation helps to pinpoint specific areas that require improvement, as the table suggests. Lower scores in particular categories can be a starting point for root cause analysis and the implementation of corrective actions.

Regulatory Compliance: In the travel industry, there are often stringent regulatory requirements regarding data management. A well-documented framework helps ensure that practices comply with applicable laws and regulations.

Customer and Partner Trust: Clear and transparent documentation on how data is managed can boost the confidence of customers and business partners of the enterprise.

Operational Efficiency: Understanding where and how data management processes can be improved can lead to more efficient operations and cost reductions for the enterprise.

Risk Management: Documentation helps the enterprise to identify data-related risks and put in place appropriate controls and remediation measures.

Accountability: By clearly defining roles and responsibilities, the enterprise can ensure that good data management practices are maintained throughout the organization.

Innovation and Product Development: Good Data Quality can pave the way for new business opportunities and improvements in existing products and services.

References: